Have we made a positive difference?

by Felisa L. Tibbitts

09/10/2017

© Illustration by Siiri Taimla

“Have we made a positive difference?” is the question we ask ourselves at the end of a training as we pass out the evaluation forms. Aren’t we ever hopeful that the training experience has “stuck” and left some lasting impression with participants? Impact is an area that funders will generally require us to address as our programmes conclude.

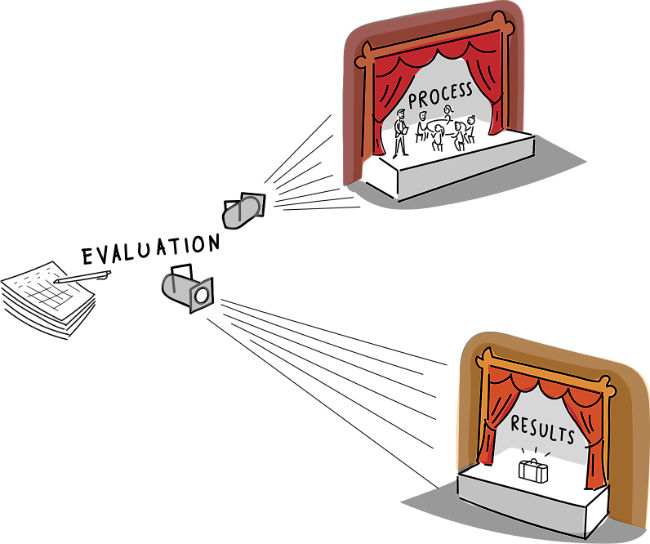

The systematic collection of information so that we understand how we carried out our activities, what learning took place alongside these, and how well we have moved ourselves and others towards our goals is what evaluation is all about. It is about learning from what we do. That learning means having documentation and reflecting on what was successful and why, and what tasks might be replicated in the future.

Project evaluation may feel like a burden, as if it is something that we do primarily for the benefit of others, such as funders. However, evaluation is a feature of our commitment to an internal process of continuous learning. So as we discuss evaluations in our organisations, it might be more helpful to think about what we might learn through our evaluations and not just how we will try to show impact.

When we carry out trainings, we are already doing some kind of informal assessment by paying attention to things like whether participants are engaged, or whether they are able to carry out the activities we ask of them. However, we also want to know what learners are thinking, how they are interpreting their experiences and, ultimately, what difference their engagement in our projects may make at a later point when they are no longer in our sights.

On occasion I have the good fortune to run into a learner I worked with years before. Sometimes they mention how valuable the experience was or how much they have been able to apply it. Of course, this is gratifying, and it would have been captured in the “results” basket of an evaluation if I had been able to administer a follow-up survey after the training. This is one of the reasons to do long-term evaluation studies: to see “what sticks”.

But evaluations will not necessarily confirm that we have reached our results. This is particularly the case when we are working in highly complex environments, where the medium- and long-term results of our training efforts will be influenced by a range of environmental conditions and actors.

Here are some of the things that I have learned from short-term studies carried out on my programmes. (I won’t say which ones!)

These examples illustrate the ways in which evaluations can help us in understanding the processes and results of our programming. It’s about getting a continuous “reality check” on our efforts and improving as we plan ahead.

There are many guides and toolkits that practitioners can consult when designing educational evaluations, some of which are listed at the end of this article. The resources I’ve included here have been developed for the fields of education for democratic citizenship (EDC), human rights education (HRE) and leadership development. If these don’t meet your needs, you might want to look for other evaluation resources or supports, for example, around youth leadership or social networking.

Most evaluation studies involve mixed methods, that is, a combination of qualitative data (for example, from interviews and observations) and quantitative data (from surveys and regular project data). Other techniques include content analysis of key documents. The methods we decide to use flow from what we really need to document. This brings us back to what we want to learn.

We can use backward mapping when we develop our evaluation designs, beginning with the stated goals and objectives of our projects. In our evaluation, we will want to document if we have been able to reach our anticipated goals. This is a “results evaluation” or “outcomes evaluation”.

We may also want to pay attention to whether or not our project processes transpired the way we expected in terms of reaching our goals. Each project has some version of an underlying “logic chain” regarding the relationship between our project processes (our “interventions” such as trainings) and the results we are looking for. We probably also have some ideas about the change processes we are fostering, including those related to learning. Were our assumptions about these processes correct? This is known as a “process evaluation”.

As part of project management we’ll also be documenting our activities. Common activity outputs for EDC/HRE training programmes are the number of trainings, participants (disaggregated by background), publications, partnerships, etc.
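For readers who keep participant records in a spreadsheet or similar file, activity outputs like these can be tallied with a few lines of code. The sketch below uses Python and is only an illustration: the record layout, the field names and the sample entries are all hypothetical and are not taken from any real project.

```python
from collections import Counter

# Hypothetical attendance records: one entry per participant per training.
# All names and values are invented for illustration.
attendance = [
    {"training": "T1", "participant": "P1", "background": "youth worker"},
    {"training": "T1", "participant": "P2", "background": "teacher"},
    {"training": "T1", "participant": "P3", "background": "teacher"},
    {"training": "T2", "participant": "P1", "background": "youth worker"},
    {"training": "T2", "participant": "P4", "background": "student"},
]


def summarise_outputs(records, field="background"):
    """Count trainings and unique participants, disaggregated by `field`."""
    trainings = {r["training"] for r in records}
    # Map each participant to one background value so that people who
    # attended several trainings are counted only once.
    participants = {r["participant"]: r[field] for r in records}
    return {
        "trainings": len(trainings),
        "participants": len(participants),
        "by_" + field: dict(Counter(participants.values())),
    }


summary = summarise_outputs(attendance)
print(summary)
```

Counting unique participants rather than attendance entries is a design choice here; a project that reports "participations" instead would count the records directly.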

After we have identified what we would like to document, we then need to figure out how best to capture this information. This is the evaluation methodology, and it brings us back to the data collection methods described above.

Allow me to share an example based on the online monitoring and evaluation course I teach for human rights education. If I were to develop a monitoring and evaluation plan for this online course, I would first want to monitor all of the “inputs” from the HREA side. These would include the syllabi, the course website, the readings, weekly instructor messages and feedback. Measuring whether or not these were given to learners is very straightforward. If we wanted to assess the quality of these inputs, we might ask for learner feedback. We might also devise other means of assessing quality, for example, how current the readings are.

An evaluation of the course could have two general dimensions. One might be process oriented. Did learners engage with the course? Did they participate in the weekly online discussions and submit written work? We can document this quite readily through the work done on the course website.

The other dimension has to do with learner results. In the short term, HREA would like learners to acquire new knowledge, such as key evaluation terms, and skills related to developing a logical framework. These results can be documented through pre- and post-course surveys of learners. We might also compare the logical frameworks that learners produce before and after the course. In the short and medium term we might look for course alumni to apply their learning. We could explore this through surveys as well as interviews, and try to understand not only the “good examples” but also instances where alumni did not apply the skills they learned.
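To make the pre- and post-survey comparison concrete, here is a minimal sketch in Python. It assumes that each learner answered the same knowledge questions before and after the course and that the answers were scored on a common scale. The learner IDs, the 0 to 10 scale and the scores are hypothetical, invented only to show the arithmetic.

```python
from statistics import mean

# Hypothetical scores (0-10) on the same knowledge questions,
# keyed by an anonymous learner ID. All values are invented.
pre_scores = {"A": 4, "B": 6, "C": 5, "D": 7}
post_scores = {"A": 7, "B": 8, "C": 5, "D": 9}


def paired_gains(pre, post):
    """Return post-minus-pre gain for each learner present in both surveys."""
    return {lid: post[lid] - pre[lid] for lid in pre if lid in post}


gains = paired_gains(pre_scores, post_scores)
average_gain = mean(gains.values())
# Learners whose score did not improve deserve a closer look,
# for example through a follow-up interview.
no_gain = sorted(lid for lid, g in gains.items() if g <= 0)

print(f"Average gain: {average_gain:.2f}")
print(f"Learners with no gain: {no_gain}")
```

Matching responses by learner, rather than comparing group averages alone, lets us see who did not benefit as well as who did. The same comparison could be repeated with a later follow-up survey that reuses the same questions.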

A final point I’d like to make in this “how to do it” section has to do with our ways of working with people. In the field of EDC/HRE, evaluation processes are now being held to the light in the same way that project development is. Are our evaluation processes participatory and transparent? Do beneficiaries have the opportunity to weigh in on evaluation design and potentially help with data collection and analysis (and not be involved only as a data source)? These ethical considerations reflect an increasing recognition among evaluators of the need not only to be culturally sensitive but also to collaborate genuinely with participants in evaluation processes. This is in the true spirit of EDC/HRE!

The knowledge base about EDC/HRE practices continues to grow, especially as those in higher education institutions have engaged in this research. I’m sure that there is a great deal of wisdom in Final Reports that others in the field will never benefit from because such reports are only filed with funders!

Within and across our EDC/HRE projects there is a range of themes that you might evaluate. These include learners and learning theory; trainers and capacity development; learning materials; and environmental conditions. I’m sure that you can think of many more topics!

I will briefly address only one here, learners and learning theory, just to illustrate the kinds of areas that you might research as part of your project and that might also contribute to a wider understanding of EDC/HRE practice. (This is called “building the evidence base”. Please think about doing this!)

1. Which dimensions of change do we want to try to assess? What new knowledge? What about attitudes and skills related to agency? What kinds of skills might we see learners applying?

Comment: Kirkpatrick’s four levels of evaluation are very helpful for designing evaluations of training programmes. This evaluation approach is presented in the Equitas manual.

2. What kinds of learner backgrounds is it relevant for us to be aware of when designing (and evaluating) our EDC/HRE programming? What kinds of background differences most positively and negatively influence EDC/HRE learner outcomes?

Comment: If you have enough participants in your programme, and they differ from one another in background features that you consider important (e.g. gender, ethnicity, age), you might design your evaluation so that you can see whether your project is more or less “successful” for participants with particular background features. In order to “test” for significant differences in project results on the basis of background features, you would need to be able to represent project impact quantitatively and run some statistical analysis.

3. What kinds of methodologies are most effective for bringing about EDC/HRE learner goals, and what are the supports and barriers for implementing such learning processes?

Comment: Participatory methods are commonly used in EDC/HRE activities and when done well they are highly engaging. However, the methods should also be instrumental in our trying to achieve goals for learners, such as retaining new knowledge, developing confidence in communication skills or understanding the complexity of social issues.

4. In what ways does educational psychology inform our view of EDC/HRE learning processes?

Comment: Adult learning theory highlights the importance of drawing on adult learners’ prior experience and using experiential methods. Our research can show whether such methods are being used effectively in our trainings. Educational psychology also keeps us focused on the learners’ perspective.
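The comment under question 2 mentions “testing” for significant differences between participants with different backgrounds. One simple way to do this, sketched below in Python, is a permutation test on the difference in average results between two groups. This is only one of several possible techniques and is offered as an illustration, not as a recommended standard. The group labels, the scores and the number of permutations are all hypothetical.

```python
import random
from statistics import mean


def permutation_test(group_a, group_b, n_permutations=5000, seed=0):
    """Two-sided permutation test for a difference in group means.

    Returns the observed difference (mean of a minus mean of b) and an
    approximate p-value: the share of random relabellings whose absolute
    difference is at least as large as the observed one.
    """
    rng = random.Random(seed)  # fixed seed so the result is repeatable
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        # Small tolerance guards against floating-point rounding.
        if abs(diff) >= abs(observed) - 1e-12:
            extreme += 1
    return observed, extreme / n_permutations


# Hypothetical knowledge gains for two background groups (invented values).
gains_group_1 = [3, 2, 4, 3, 2, 4]
gains_group_2 = [1, 2, 0, 1, 2, 1]

difference, p_value = permutation_test(gains_group_1, gains_group_2)
print(f"Difference in average gain: {difference:.2f}")
print(f"Approximate p-value: {p_value:.3f}")
```

A small p-value suggests that a difference this large would be unlikely if background made no difference at all. It does not tell us why the groups differ, and with very small groups the result should be read with caution and alongside qualitative data.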

If any of these questions interest you, know that they interest others as well. Scholarship on these topics can be found in numerous academic journals, many of which are migrating to “open access”, which means that you won’t need a paid subscription in order to access them. (Here is a link to a directory of open access journals: https://doaj.org/.) We can also find some materials published on websites, such as that of the Council of Europe.

The EDC/HRE field continues to evolve. Every time we innovate in our own work, there is an opportunity to learn something new. If you have been a trainer for more than 10 years, think back on your own experiences. What are some of the things that you do differently now and where did this innovation come from? Perhaps it came from your own learned experience and perhaps it also came from ideas from the “outside” or new challenges that your programming rose to address.

A new feature of the EDC/HRE environment is the requirement that states self-report to the United Nations on their accomplishments vis-à-vis Sustainable Development Goal 4.7 and Global Citizenship Education. Perhaps this is far away from our field work. However, these policy frameworks provide an added incentive to think about measurement.

Before I conclude this article, I should mention that we continue to be plagued by a lack of data on the long-term effects of participation in EDC/HRE programming. This is a very complex problem and I fear that I will be too discouraging if I present you with all the reasons why!

One of the reasons is simply that project developers may not think of organising long-term studies. If you are engaged in an EDC/HRE programme that will have lasting impact on learners, try to build in data collection that will take place sometime after the learners’ involvement in the project. This could be a simple survey (ideally with some of the same questions from earlier ones you administered in the project, so that you can compare results), as well as interviews.

Another reason we don’t have much long-term data is that collecting it is complex. It’s challenging to identify (a) what we think will “stick” with our learners even one year after an EDC/HRE learning experience; (b) what other learner experiences in the meantime might influence the results that we’d like to attribute to our programme; and (c) how to measure some of the results we might care about most. Here is where you, your colleagues and your learners will want to think together about how to address the above. You may also want to look for similar studies to see how they were carried out.

Evaluations do have technical aspects to them but for the most part they have to do with good thinking: looking for clear answers about how our work was carried out and what transpired. They also require stamina in remaining open to learning from whatever the documentation presents us with!

EDC/HRE oriented

The Council of Europe has various publications on the implementation of the EDC/HRE Charter, including from the Pilot Project Scheme, which incorporate learner assessment and evaluation.

Amnesty International-IS (2010), Learning from our experience: human rights education monitoring and evaluation toolkit, Amnesty International Publications, London.

Equitas and Office of the UN High Commissioner for Human Rights (2011), Evaluating human rights training activities: a handbook for human rights educators, Office of the UN High Commissioner for Human Rights, Geneva.

Office of the UN High Commissioner for Human Rights (2016), A human rights-based approach to data: leaving no one behind and the 2030 development agenda, Office of the UN High Commissioner for Human Rights, Geneva.

General guides

Goyal R., Pittman A. and Workman A. (2010), Measuring change: monitoring and evaluating leadership programs: a guide for organizations, Women’s Learning Partnership, Bethesda, MD.

Grantcraft (2011), Mapping change: using a theory of change to guide planning and evaluation, Foundation Center and European Foundation Centre, New York and Brussels.

Lee K. (2007), The importance of culture in evaluation: a practical guide for evaluators, The Colorado Trust, Denver, CO.

UNICEF (2000), Selecting indicators, UNICEF, available at: www.ceecis.org/remf/Service3/unicef_eng/module2/part3.html
